
The importance of app stability cannot be overstated. More consumers than ever purchase goods and services online, and more employees rely on business apps in their day-to-day work. B2C apps give customers new ways to engage with brands and help drive revenue growth, while B2B apps let enterprises modernize operations without significant training. In short, apps are more prevalent than ever in both our personal and work lives, so it's critical that organizations deliver an error-free app experience.

Although stability is a KPI owned by engineering organizations, and one that is gaining ground, it has a significant impact on overall business performance and growth. Simply put, users can't stand it when an app stalls or crashes, and one bad experience can lose a user for good. With social media and app store reviews and ratings, that single bad experience can ripple out well beyond the users who encountered it, severely harming a business's bottom line.

To give engineering teams hard data on how their apps compare with others in the industry, Bugsnag recently announced the results of its new report, Application Stability Index: Are your Apps Healthy? To ensure accurate insights, we analyzed the performance of approximately 2,500 top mobile and web applications (as defined by session volume) within our customer base, drawn from eCommerce, media and entertainment, financial services, logistics, and gaming companies, among other verticals. We hope this data can serve as a benchmark to help engineering teams set their own application stability SLAs and SLOs, and to guide decisions about when to build features and when to fix bugs based on an app's current stability.

We viewed the results through the lens of "five nines," the 99.999% uptime and availability target that infrastructure and operations teams aim for. From this vantage point, the data shows that the typical mobile and web app achieves a strong stability score, but there's still room for improvement. Here's a deeper look at what we discovered about mobile and web app stability.

Mobile App Stability

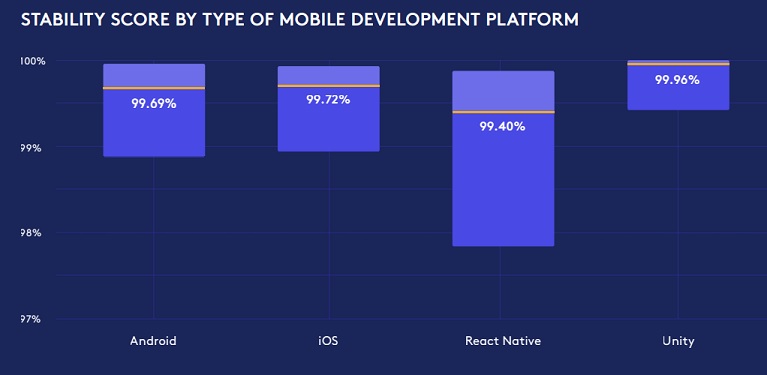

The report evaluated apps built on four mobile development platforms: Android, iOS, React Native, and Unity. Stability scores were lowered by session-ending events, which include crashes as well as ANRs (Application Not Responding errors) in Android, React Native, and Unity applications and OOMs (Out of Memory terminations) in iOS applications.
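One way to picture a session-based stability score is as the share of sessions that contain no session-ending event. The sketch below assumes that definition; the `Session` type, the `SESSION_ENDING` mapping, and the event labels are hypothetical illustrations built from the description above, not Bugsnag's data model or API.

```python
from dataclasses import dataclass, field

# Hypothetical mapping of platform -> event types that end a session,
# following the description above: crashes everywhere, ANRs on
# Android, React Native and Unity, OOMs on iOS.
SESSION_ENDING = {
    "android": {"crash", "anr"},
    "react_native": {"crash", "anr"},
    "unity": {"crash", "anr"},
    "ios": {"crash", "oom"},
}


@dataclass
class Session:
    platform: str
    events: list[str] = field(default_factory=list)  # event types seen in this session


def is_failed(session: Session) -> bool:
    """A session fails if it contains at least one session-ending event for its platform."""
    ending = SESSION_ENDING[session.platform]
    return any(event in ending for event in session.events)


def stability_score(sessions: list[Session]) -> float:
    """Percentage of sessions that contain no session-ending event."""
    if not sessions:
        raise ValueError("need at least one session")
    failed = sum(is_failed(s) for s in sessions)
    return 100.0 * (len(sessions) - failed) / len(sessions)


sessions = [
    Session("ios", ["oom"]),                # session-ending on iOS
    Session("ios"),                         # clean
    Session("android", ["anr", "crash"]),   # counted once, not twice
    Session("android", ["handled_error"]),  # not session-ending
]
print(stability_score(sessions))  # prints 50.0
```

Counting failed sessions rather than raw events keeps a single bad session with several errors from being penalized more than once.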

Overall, the median stability score of mobile apps came in at 99.63%, a failure rate of 0.37%. This means that roughly one out of every 270 customers could be having a completely broken experience with a mobile application. Measured against the "five nines" standard, which allows just one failure in 100,000, a median stability of 99.63% indicates that engineering organizations have a clear opportunity to commit more resources to measuring and improving app stability and customer experience.
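The comparison with five nines is simple arithmetic: a stability percentage implies a failure rate, which can be restated as "one in N." This small sketch works through the mobile median; the function names are illustrative only.

```python
def failure_rate(stability_pct: float) -> float:
    """Fraction of sessions (or users) that hit a failure, given a stability percentage."""
    return (100.0 - stability_pct) / 100.0


def one_in_n(stability_pct: float) -> float:
    """Express the failure rate as 'one in N'."""
    return 1.0 / failure_rate(stability_pct)


mobile_median = 99.63  # median mobile stability from the report
five_nines = 99.999    # the "five nines" target

print(f"mobile median: 1 in {one_in_n(mobile_median):.0f}")  # prints: mobile median: 1 in 270
print(f"five nines: 1 in {one_in_n(five_nines):.0f}")        # prints: five nines: 1 in 100000
ratio = failure_rate(mobile_median) / failure_rate(five_nines)
print(f"gap: {ratio:.0f}x more failures")                    # prints: gap: 370x more failures
```

In other words, the median mobile app fails about 370 times as often as a five-nines target would allow.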

Here's how the four mobile development platforms stacked up:

Android and iOS native applications tend to have a high median stability because they are typically built by specialized developers with the expertise to understand and address stability issues effectively. Android apps tend to have a slightly lower median stability than iOS apps because Android is a much less constrained development environment. The fragmentation of Android devices makes applications harder to test, whereas iOS teams only need to deliver a stable experience on the limited number of devices Apple releases each year.

Web App Stability

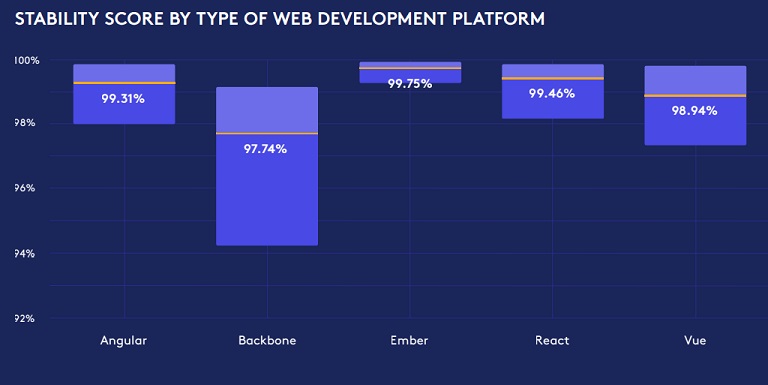

The report evaluated five front-end development platforms: Angular, Backbone, Ember, React, and Vue. Web stability scores were determined by unhandled exceptions, such as a bug that prevents an entire page from rendering, an event handler bug that causes a user interaction to fail, or an unhandled promise rejection.
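Because only unhandled exceptions count, an error that application code catches and reports itself does not lower the web score. The sketch below illustrates that distinction under a simple assumed model; the `ErrorEvent` type and its `kind` labels are hypothetical, not part of any real SDK.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ErrorEvent:
    kind: str      # hypothetical labels, e.g. "render", "event_handler", "unhandled_rejection"
    handled: bool  # True if application code caught the error and reported it itself


def web_stability(sessions: list[list[ErrorEvent]]) -> float:
    """Percentage of sessions in which every reported error was handled.

    Only unhandled exceptions lower the score; handled errors are ignored.
    """
    if not sessions:
        raise ValueError("need at least one session")
    clean = sum(1 for events in sessions if all(e.handled for e in events))
    return 100.0 * clean / len(sessions)


sessions = [
    [],                                                  # no errors
    [ErrorEvent("event_handler", handled=True)],         # caught and reported
    [ErrorEvent("unhandled_rejection", handled=False)],  # lowers the score
    [ErrorEvent("render", handled=False)],               # lowers the score
]
print(web_stability(sessions))  # prints 50.0
```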

Overall, web apps had a median stability score of 99.39%, lower than the 99.63% median for mobile apps. The gap may exist because monitoring and addressing client-side issues in JavaScript applications generally takes more effort than it does in mobile applications. In addition, because mobile is a newer discipline, mobile teams have emphasized managing errors from the start, whereas web development is an older discipline that had to learn this over time.

Angular, Ember, React, and Vue are modern, opinionated JavaScript frameworks built with error handling in mind. Angular and React were created and sponsored by development teams at Google and Facebook, respectively. Engineering organizations working with these frameworks have access to the resources and documentation they need to investigate and fix errors that may affect application stability. Backbone, on the other hand, is an older and less opinionated web development framework. Teams using it don't get the same coding guidelines, best practices, and error-handling conventions that the more recent frameworks provide, which may explain the lower median stability and wider range for Backbone apps.

New Features Must Be Balanced with App Stability

App stability plays a crucial role in driving broad business outcomes, impacting conversion rates, engagement, loyalty, developer productivity, and competitive advantage. While delivering new features at a steady pace is also extremely important, these features will provide little value if an app is frequently crashing. Organizations must balance the need for new functionality with the need for an error-free experience.
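One way to turn a stability SLO into a build-versus-fix decision is an error-budget style rule: keep shipping features while observed failures stay within what the SLO tolerates, and switch to bug fixing once that allowance is used up. This is a minimal sketch of that swapped-in technique, not a method from the report; the 99.5% SLO, the `Priority` enum, and both function names are hypothetical.

```python
from enum import Enum


class Priority(Enum):
    BUILD_FEATURES = "build features"
    FIX_BUGS = "fix bugs"


def error_budget_remaining(current_pct: float, slo_pct: float) -> float:
    """Fraction of the failure allowance implied by the SLO that is still unused.

    Negative values mean the SLO is already being missed.
    """
    if not 0.0 <= slo_pct < 100.0:
        raise ValueError("SLO must be a percentage in [0, 100)")
    allowed = 100.0 - slo_pct   # failures the SLO tolerates, in percentage points
    used = 100.0 - current_pct  # failures actually observed
    return 1.0 - used / allowed


def next_priority(current_pct: float, slo_pct: float) -> Priority:
    """Hypothetical policy: fix bugs once the error budget is exhausted, otherwise build."""
    if error_budget_remaining(current_pct, slo_pct) <= 0.0:
        return Priority.FIX_BUGS
    return Priority.BUILD_FEATURES


# 99.5% is an illustrative SLO, not a figure from the report.
print(next_priority(99.63, 99.5))  # mobile median: prints Priority.BUILD_FEATURES
print(next_priority(99.39, 99.5))  # web median: prints Priority.FIX_BUGS
```

A real team might add a buffer so that priorities don't flip back and forth when stability hovers right at the target.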
