EMA’s survey team is in the final stages of launching my latest research, “Automating for Digital Transformation: Tools-Driven DevOps and Continuous Software Delivery.”

Preliminary data should be in (and shared with survey sponsors) around the beginning of November 2015, with my research paper on the topic out by November 26 (US Thanksgiving). We’ll present a webinar summarizing the key findings at 2 p.m. ET on December 1. So stay tuned for additional details as they become available.

While I’ve been researching and writing about DevOps and Continuous Delivery for more than five years, they now seem more relevant than ever. As the pace of business continues to accelerate, the expectations placed on IT organizations continue to escalate. And although we typically think about DevOps and Continuous Delivery in the context of IT, it is clear that this momentum is being driven by Line of Business.

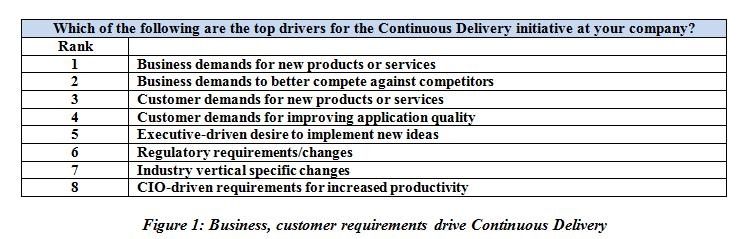

As Figure 1 shows, EMA’s 2014 research on DevOps and Continuous Delivery found that the top four drivers for Continuous Delivery were business- and customer-related. Businesses need new products and services to remain competitive, and customers expect to be able to interact with a company in efficient, seamless ways.

In other words, from the business perspective, Continuous Delivery is not simply “nice to have.” It is a must-have for businesses banking their futures on accelerating the speed at which software is delivered.

The Catch-22, however, is that velocity alone is no longer enough. As software becomes increasingly revenue-critical, quality becomes as important as speed. So from the Continuous Delivery perspective, the true challenge is “acceleration with quality”: delivering software faster while ensuring the quality of the end product.

I believe the full value of automation as it supports Continuous Delivery lies at the intersection of speed and quality. Without automation, it’s possible to deliver speed or quality, but not both. That is, delivery can be accelerated by shortchanging stakeholder reviews, testing, and quality assurance, or software can be delivered more slowly and with higher quality. However, without unlimited budget and personnel (and maybe even with those things), it is not possible to achieve both speed and quality in the absence of automation.

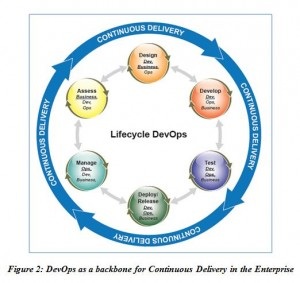

What do I mean by “automation”? Figure 2 depicts an idealized view of DevOps as a backbone for a fully automated, integrated Continuous Delivery lifecycle. Dev, Ops, and Line of Business share responsibility for all aspects of the process including the quality of the final product, with each of the three becoming the “primary” lead (underlined in the diagram) at different stages of the lifecycle.

In this scenario, each stage is appropriately instrumented and automated, and artifacts generated at each stage are shared with the next. Requirements generated during the Design stage, for example, need to be available to subsequent stages to support development, testing, and service level measurements.

Ideally, DevOps encompasses the process- and team-related aspects of the lifecycle, while Continuous Delivery encompasses both the multiple iterative loops within the stages and the handoffs across the stages. Automation facilitates the entire process.

In short, Continuous Delivery can be accelerated to the degree that each stage of the lifecycle is accelerated and the handoffs between stages are optimized. In other words, the Continuous Delivery process is only as fast as its slowest link. This is a great argument for production-grade Application Lifecycle Management (ALM) solutions as well, particularly those with a documented track record supporting accelerated software delivery.
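
The “slowest link” point can be made concrete with a little arithmetic. The following is a minimal sketch, not a measurement: the stage names, the hour values, and the `Stage`, `lead_time`, and `bottleneck` helpers are all hypothetical and chosen purely for illustration. It treats end-to-end lead time as the sum of each stage’s working time plus the wait before the next stage picks the change up, and it identifies the stage that contributes the most.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    name: str
    work_hours: float     # time spent doing the stage's own work
    handoff_hours: float  # wait before the next stage picks the change up

    @property
    def total_hours(self) -> float:
        return self.work_hours + self.handoff_hours


def lead_time(stages: list[Stage]) -> float:
    """End-to-end hours for one change to pass through every stage."""
    return sum(stage.total_hours for stage in stages)


def bottleneck(stages: list[Stage]) -> Stage:
    """The stage (work plus handoff) that contributes the most time."""
    return max(stages, key=lambda stage: stage.total_hours)


# Hypothetical numbers, for illustration only.
PIPELINE = [
    Stage("design", work_hours=16.0, handoff_hours=8.0),
    Stage("develop", work_hours=40.0, handoff_hours=4.0),
    Stage("test", work_hours=24.0, handoff_hours=24.0),
    Stage("deploy", work_hours=2.0, handoff_hours=0.0),
]

if __name__ == "__main__":
    slowest = bottleneck(PIPELINE)
    print(f"lead time: {lead_time(PIPELINE)} hours")
    print(f"slowest stage: {slowest.name} ({slowest.total_hours} hours)")
```

With these made-up figures, half of the time attributed to testing is waiting rather than working, which is the kind of handoff cost that integration across tools is meant to remove.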

Automation is also one of the best ways to ensure software quality. Automating personnel-intensive tasks such as manipulating test data, building test environments, and deploying software packages minimizes the possibility of human error inherent in multi-step processes. It also supports a “build once, run many” scenario in which automated processes are controlled by policies, templates, and similar constraints that promote quality assurance. Repeatable processes, data- and metric-driven testing, and predictable deployments all support positive outcomes.
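
One way to picture the build-once, run-many idea is a single artifact, identified by its checksum, that is promoted unchanged from one environment to the next, with a policy deciding whether each promotion is allowed. The sketch below assumes a hypothetical policy table and hypothetical check names (`unit_tests`, `integration_tests`); it does not reflect any particular vendor’s tooling.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Artifact:
    name: str
    version: str
    sha256: str  # fingerprint of the exact bytes that were built


def build(name: str, version: str, payload: bytes) -> Artifact:
    """Build once: record a checksum of the package contents."""
    return Artifact(name, version, hashlib.sha256(payload).hexdigest())


# Hypothetical policy: checks that must have passed before an
# artifact may be promoted into each environment.
POLICY = {
    "test": set(),
    "staging": {"unit_tests"},
    "production": {"unit_tests", "integration_tests"},
}


def promote(artifact: Artifact, environment: str, passed_checks: set[str]) -> dict:
    """Run many: deploy the same artifact wherever the policy allows it."""
    missing = POLICY[environment] - passed_checks
    if missing:
        raise PermissionError(
            f"{artifact.name} {artifact.version} blocked from {environment}: "
            f"missing {sorted(missing)}"
        )
    # The deployment record carries the original checksum, so every
    # environment can confirm it is running the same build.
    return {"environment": environment, "sha256": artifact.sha256}


if __name__ == "__main__":
    package = build("storefront", "1.4.0", b"compiled application bytes")
    print(promote(package, "staging", {"unit_tests"}))
```

Because nothing is rebuilt between environments, a failure in production cannot be blamed on a difference between what was tested and what was shipped.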

If this sounds like smoke and mirrors to you, for the moment you are correct. I have talked with companies of various sizes that have automated the Continuous Delivery pipeline from code check-in to production and, admittedly, they do deliver software very quickly. However, virtually all have built their support practices around an expectation of frequent failure. They support their newly accelerated code delivery with fail-safe mechanisms such as “A and B” environments, or with brute-force methods such as “all hands on deck” scrambles to remediate production failures.
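
For readers unfamiliar with the “A and B” approach, the sketch below shows the general idea under simplifying assumptions: two environments, one live and one standby, with a new release going to the standby side and traffic switching only if a health check passes. The `ABEnvironments` class and the health-check callback are hypothetical names used for illustration, not a description of how any of the companies mentioned actually implement it.

```python
from typing import Callable, Optional


class ABEnvironments:
    """Two environments: one serves traffic, the other is the standby."""

    def __init__(self, live_version: str) -> None:
        self.versions: dict[str, Optional[str]] = {"A": live_version, "B": None}
        self.live = "A"

    @property
    def standby(self) -> str:
        return "B" if self.live == "A" else "A"

    def release(self, version: str, health_check: Callable[[str], bool]) -> bool:
        """Deploy to the standby side and switch traffic only if it is healthy."""
        target = self.standby
        self.versions[target] = version
        if not health_check(version):
            return False  # traffic never left the current live environment
        self.live = target
        return True

    def rollback(self) -> None:
        """Point traffic back at the other environment, which still holds the prior release."""
        self.live = self.standby


if __name__ == "__main__":
    environments = ABEnvironments(live_version="1.0")
    environments.release("1.1", health_check=lambda version: True)
    print(environments.live, environments.versions[environments.live])
```

The design choice here is that the previous release is never torn down at switch time, which is what makes recovery from a bad release a matter of redirecting traffic rather than redeploying.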

One roadblock is that the process described requires not only high levels of automation, but also high levels of integration across the tools supporting the lifecycle. Most of the tools supporting such a lifecycle approach are currently available; however, they aren’t easily connected in a way that supports large-scale automation.

Nevertheless, it’s difficult to move forward without a vision, and virtually all of the products in this space are continually enhanced. Improvements to the integrated lifecycle will likely continue to be delivered, as customers are recognizing that, when speed and quality are equally important to the business, automation is the only viable answer.

To find out more, stay tuned for EMA’s latest findings on the impact of automated DevOps and Continuous Delivery, which will be featured on DEVOPSdigest.

Julie Craig is Research Director for Application Management at Enterprise Management Associates (EMA).
