Docker, Inc.® announced Docker Hardened Images (DHI), a curated catalog of security-hardened, enterprise-grade container images designed to meet today’s toughest software supply chain challenges.

In my first blog in this series, I highlighted some of the main challenges teams face with trying to scale mainframe DevOps.

Start with Taking a Low-Risk Approach to DevOps for Mainframe Organizations - Part 1

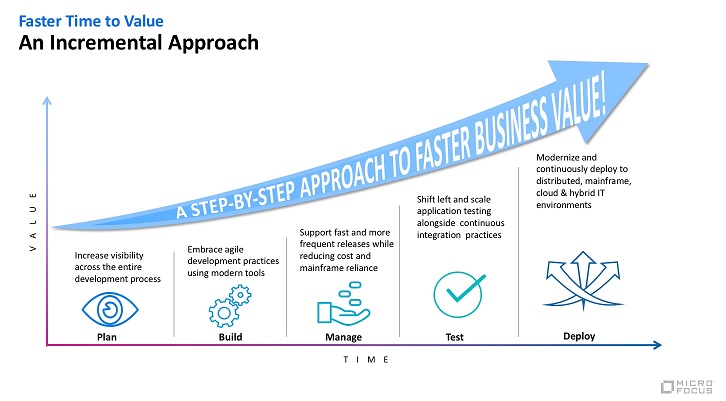

To get past these hurdles, the key is to develop an incremental approach that enables teams to capture value along each step of the journey. With this approach, software bottlenecks are identified and addressed based on the business need – enabling Dev, QA, and Ops teams to work together to deliver better business outcomes. Here are the three major steps for taking an incremental approach to DevOps.

Make Work Visible

The first thing you need to do is "See the System." It's important to get a common view of the work to ensure transparency across the organization. At a system level, you'll need to create a common view of your mainframe deployment pipelines and their interdependencies with distributed environments. This includes highlighting the bottlenecks, waste, and other inefficiencies that can be optimized by using DevOps practices. Ultimately, this common view will serve as the forcing function to help align teams across the organization.

Gaining application visibility, control, and insight will also provide teams with a better understanding of the impact of a software change at the application level. This transparency will allow for better estimates of the work in progress, which can be leveraged to reduce rework and provide early detection of production issues. By embracing this sort of agile development practice and leveraging modern development IDEs, developers will be able to discover issues earlier in the process – boosting productivity and delivering secure features faster to improve collaboration and alignment across both mainframe and distributed teams.

Integrate into the DevOps Toolchain

In order to support faster and more frequent releases, the next step is to ensure the DevOps toolchain is integrated across the entire value stream – from planning phase straight through to managing the application in production. This will allow for a seamless "best of breed" integration across mainframe tools into the broader DevOps toolchain eco-system.

If your current set of tools don't provide adequate integration, it's time to consider upgrading to more modern mainframe solutions. Think about it this way: the more complex your software delivery process is, the greater the need for a flexible, adaptive, and integrated DevOps toolchain.

Furthermore, the integration architecture needs to be open and extensible, so that it's capable of integrating with open source tools while maintaining access to and integrity with core systems and data. This will help reduce your reliance on costly mainframe infrastructure.

Optimize the Mainframe Deployment Pipeline

Once the DevOps toolchain has been integrated, you can begin drilling down into pipeline optimization. The previously discussed common view of the mainframe deployment pipelines should provide guidance around sources of waste and long lead times. Primary sources of application delivery cost and waste include:

■ Lack of understanding of the business requirements leading to high development rework costs and long lead times

■ Too much manual effort in building, provisioning, testing, and deploying applications and environments

■ Too many meetings and slow approval processes around change and release management

■ Failed deployments and production incidents

A good place to start is with automation. Automating mainframe environment provisioning, testing, and deployments will dramatically reduce manual effort, increase deployment frequency, decrease lead times and produce fewer production incidents.

Having the option to automate and re-host mainframe test environments can dramatically accelerate time-to-market at a much lower cost. This is because testing can consume an enormous amount of mainframe processing power, which keeps mainframe costs high. Lead times for mainframe test environments are often days to weeks. Re-hosting pre-production testing from the mainframe onto lower cost platforms can reduce lead times from days to minutes. In addition, re-hosting test environments allows testing to scale up as required with significantly lower operating costs.

Mainframe Teams Need to Take the Initiative

In this incredibly competitive digital economy, the mainframe can serve as a critical competitive differentiator, but only if it participates in the digital transformation of the enterprise. The business requires "on-demand" software delivery, which means mainframe teams have to embrace the DevOps culture of change and continuous improvement.

By breaking out of silos and taking the initiative to implement an incremental strategy to Mainframe DevOps, teams will be able to spend less time focused on delivering applications and more time on doing innovative work that adds real value to the organization.

Industry News

GitHub announced that GitHub Copilot now includes an asynchronous coding agent, embedded directly in GitHub and accessible from VS Code—creating a powerful Agentic DevOps loop across coding environments.

Red Hat announced its integration with the newly announced NVIDIA Enterprise AI Factory validated design, helping to power a new wave of agentic AI innovation.

JFrog announced the integration of its foundational DevSecOps tools with the NVIDIA Enterprise AI Factory validated design.

GitLab announced the launch of GitLab 18, including AI capabilities natively integrated into the platform and major new innovations across core DevOps, and security and compliance workflows that are available now, with further enhancements planned throughout the year.

Perforce Software is partnering with Siemens Digital Industries Software to transform how smart, connected products are designed and developed.

Reply launched Silicon Shoring, a new software delivery model powered by Artificial Intelligence.

CIQ announced the tech preview launch of Rocky Linux from CIQ for AI (RLC-AI), an operating system engineered and optimized for artificial intelligence workloads.

The Linux Foundation, the nonprofit organization enabling mass innovation through open source, announced the launch of the Cybersecurity Skills Framework, a global reference guide that helps organizations identify and address critical cybersecurity competencies across a broad range of IT job families; extending beyond cybersecurity specialists.

CodeRabbit is now available on the Visual Studio Code editor.

The integration brings CodeRabbit’s AI code reviews directly into Cursor, Windsurf, and VS Code at the earliest stages of software development—inside the code editor itself—at no cost to the developers.

Chainguard announced Chainguard Libraries for Python, an index of malware-resistant Python dependencies built securely from source on SLSA L2 infrastructure.

Sysdig announced the donation of Stratoshark, the company’s open source cloud forensics tool, to the Wireshark Foundation.

Pegasystems unveiled Pega Predictable AI™ Agents that give enterprises extraordinary control and visibility as they design and deploy AI-optimized processes.

Kong announced the introduction of the Kong Event Gateway as a part of their unified API platform.

Azul and Moderne announced a technical partnership to help Java development teams identify, remove and refactor unused and dead code to improve productivity and dramatically accelerate modernization initiatives.